In 1988, the Morris Worm became one of the first large-scale internet security incidents, spreading through vulnerable Unix services in under 24 hours. By today’s standards, the code was crude, yet the disruption it caused highlighted a new reality: software itself could be weaponized.

Nearly four decades later, malware no longer relies solely on blunt replication. Modern attacks operate as multi-stage, adaptive systems that embed themselves in trusted chains, abuse tools already present on endpoints, and mutate their code to evade detection. Recent events bear this out: in February 2025, attackers drained over $1.5 billion from Bybit in what analysts called the “largest cryptocurrency heist to date.”

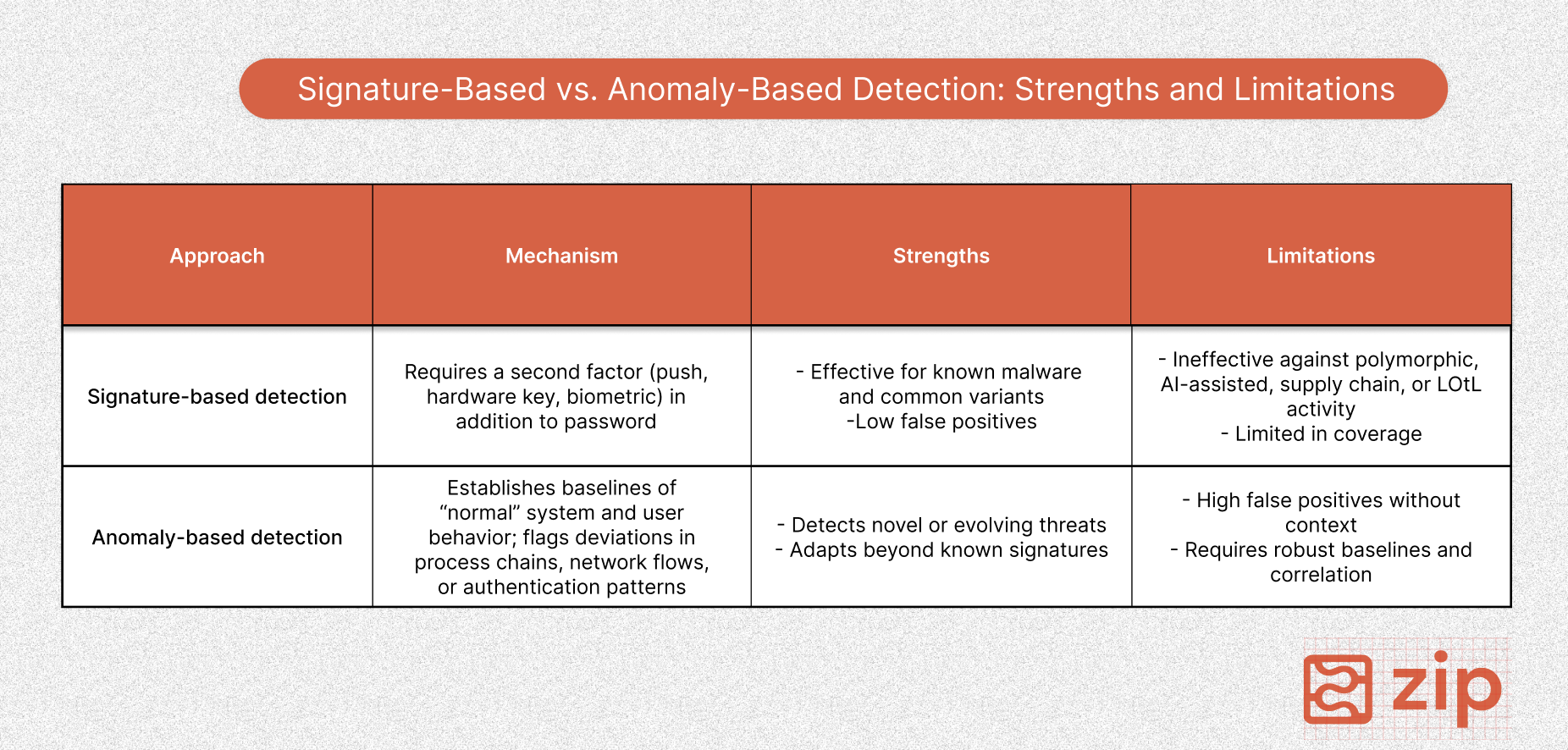

Traditional defenses built on signature-based detection (matching known byte patterns or file hashes) are poorly suited to today’s attacks. The 2025 *Verizon Data Breach Investigations Report* found that exploitation of vulnerabilities as an initial access vector grew 34% year over year. With the average global breach now costing about $4.4 million, this is a threat teams cannot afford to ignore.

A shift is now underway toward observability-driven security, in which rich telemetry, behavioral baselines, and contextual correlation provide the visibility needed to catch threats that lack traditional indicators. The sections that follow trace this shift. First, we’ll map the current malware landscape. Then, we’ll show how observability helps detect and contain malware. Finally, we’ll lay out the steps for practical implementation. Let’s begin.

Modern malware incidents typically occur through one of three recurring strategies: abusing local tooling, injecting through dependencies, and mutating at scale. We’ll examine each one below.

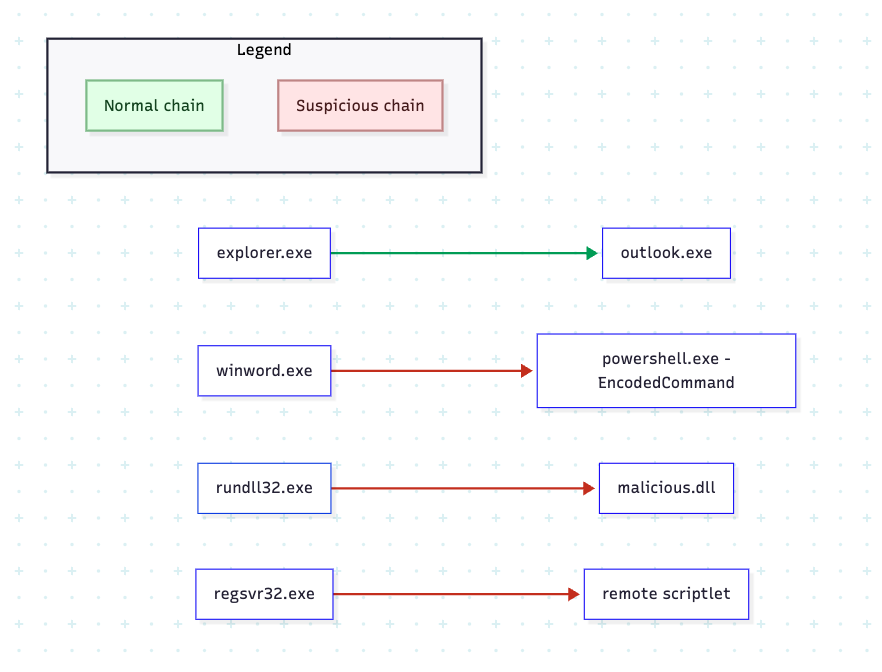

Malware authors increasingly avoid dropping foreign binaries. Instead, they use what is already present on a host: administrative tools and management frameworks that the operating system relies on daily. This strategy, known as Living off the Land (LOtL), hijacks binaries that defenders cannot simply block.

```mermaid
flowchart LR

%% Normal chain
EXPL[explorer.exe] --> OUTL[outlook.exe]

%% Suspicious chains
WINW[winword.exe] --> PS[powershell.exe -EncodedCommand]
RUND[rundll32.exe] --> DLL[malicious.dll]
REG[regsvr32.exe] --> SCR[remote scriptlet]

%% Legend
subgraph Legend
    L1["Normal chain"]:::legendNormal
    L2["Suspicious chain"]:::legendSusp
end

%% Styles
classDef legendNormal fill:#e8ffe8,stroke:#90c290,color:#1f4d1f;
classDef legendSusp fill:#ffe8e8,stroke:#c29090,color:#4d1f1f;

%% Edge colors
%% explorer -> outlook
linkStyle 0 stroke:#22a65a,stroke-width:2px;
%% winword -> powershell
linkStyle 1 stroke:#c23616,stroke-width:2px;
%% rundll32 -> dll
linkStyle 2 stroke:#c23616,stroke-width:2px;
%% regsvr32 -> scriptlet
linkStyle 3 stroke:#c23616,stroke-width:2px;
```

The mechanism is straightforward. Instead of delivering an obvious payload, attackers issue commands through trusted interpreters. PowerShell, for instance, can run obfuscated commands or download and execute scripts entirely in memory, often launched with flags like -EncodedCommand or -ExecutionPolicy Bypass. Similarly, Windows Management Instrumentation (WMI) supports remote process execution and persistence through event subscriptions. Tools such as rundll32 or regsvr32 can be abused to load libraries or remote scripts without leaving behind installer traces. Because these tools are signed by Microsoft and ship with Windows, they pass allowlisting checks and rarely trigger traditional antivirus alerts.
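Because -EncodedCommand hides the script inside Base64, a common first triage step is to decode it. The sketch below assumes you already have the full command line, for example from process creation telemetry. `inspect_powershell` and the flag sets are hypothetical helpers. The whitespace tokenizer is deliberately naive, so quoted arguments and escaping would need more care in practice.

```python
import base64

# Common spellings of the two flags discussed above. PowerShell accepts other
# abbreviations too, so treat these sets as a starting point, not a full list.
ENCODED_FLAGS = {"-encodedcommand", "-enc", "-ec", "-e"}
POLICY_FLAGS = {"-executionpolicy", "-ep", "-ex"}


def inspect_powershell(cmdline: str) -> dict:
    """Report risky flags in a PowerShell command line and decode any encoded payload."""
    tokens = cmdline.split()
    findings = {"flags": [], "decoded": None}
    for i, token in enumerate(tokens):
        flag = token.lower()
        nxt = tokens[i + 1] if i + 1 < len(tokens) else ""
        if flag in ENCODED_FLAGS and nxt:
            findings["flags"].append("EncodedCommand")
            try:
                # -EncodedCommand takes Base64 over the UTF-16LE bytes of the script.
                findings["decoded"] = base64.b64decode(nxt).decode("utf-16-le")
            except ValueError:  # malformed Base64 or truncated UTF-16 data
                pass
        elif flag in POLICY_FLAGS and nxt.lower() == "bypass":
            findings["flags"].append("ExecutionPolicy Bypass")
    return findings


# Usage: build an encoded command the way an attacker would, then inspect it.
script = "Write-Output 'hello'"
encoded = base64.b64encode(script.encode("utf-16-le")).decode("ascii")
print(inspect_powershell(f"powershell.exe -NoProfile -ExecutionPolicy Bypass -enc {encoded}"))
# {'flags': ['ExecutionPolicy Bypass', 'EncodedCommand'], 'decoded': "Write-Output 'hello'"}
```

Decoding alone does not prove malice, since administrators also use encoded commands. It does, however, turn an opaque blob into something an analyst or a downstream rule can inspect.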

We saw this in the 2017 NotPetya outbreak. Once inside a network, the malware used stolen credentials to propagate laterally via PsExec and WMI, both legitimate administrative mechanisms. For defenders, the challenge is more than spotting the execution of these binaries: they must distinguish routine administration from malicious intent.

Signature-based detection falters here because there is no “bad file” to match; the executables are legitimate. The maliciousness lies in context, which is hard to judge because the same command can look identical whether an administrator runs it for maintenance or an attacker runs it to move through the network.

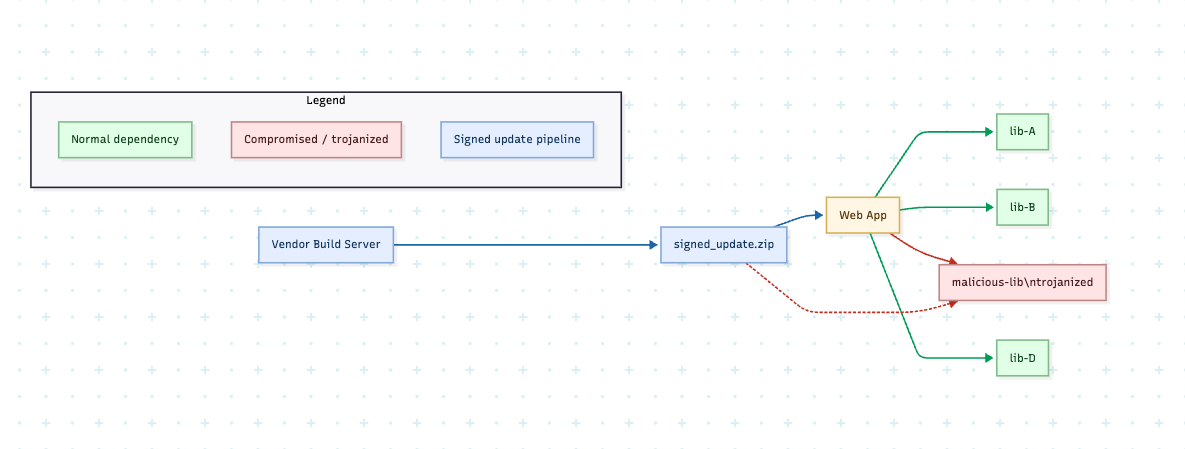

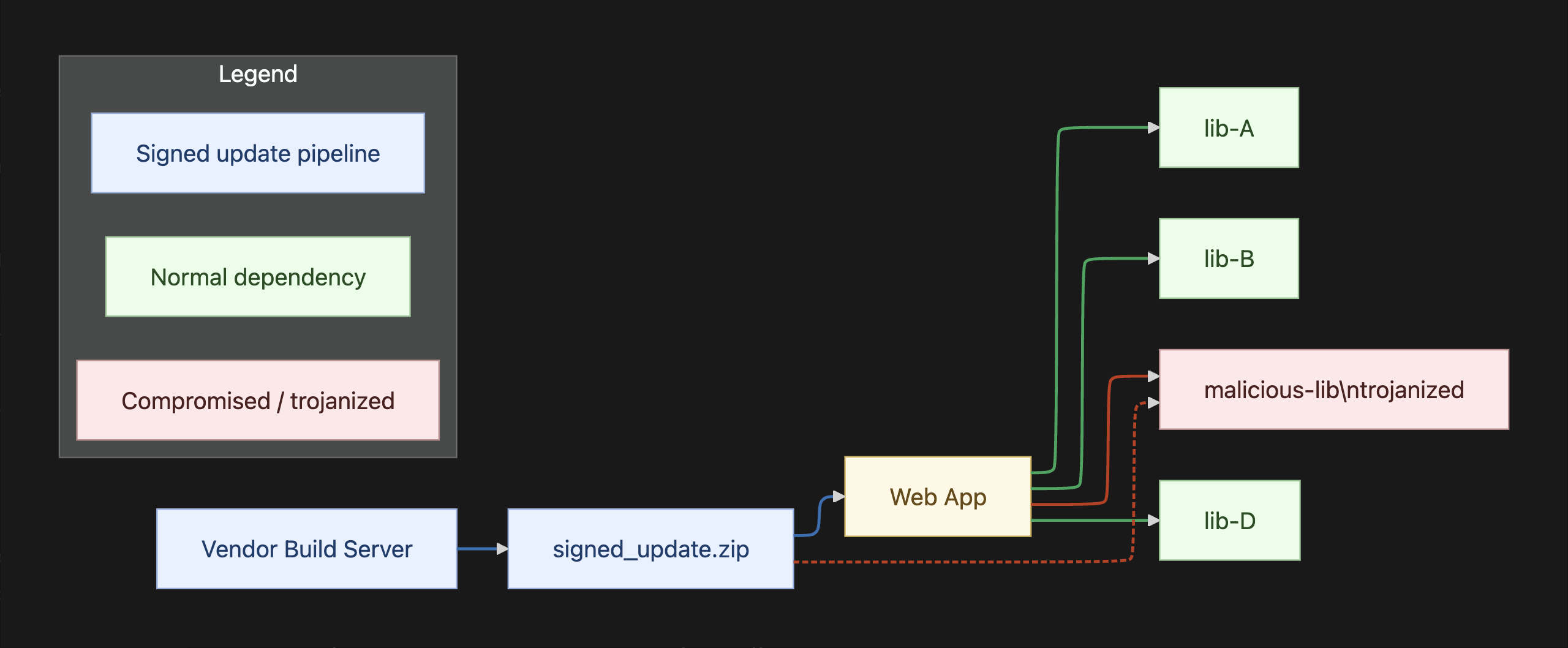

Where living-off-the-land attacks exploit trust in local binaries, supply chain attacks operate one layer higher: they compromise the external software that organizations integrate into their environments. In this model, the payload arrives through an update mechanism or an open-source dependency. Because organizations implicitly trust these sources, malware can bypass perimeter defenses and embed itself in existing workflows.

```mermaid
flowchart LR

%% Central app and dependencies
WEB[Web App]
LIB_A[lib-A]
LIB_B[lib-B]
MAL["malicious-lib<br/>trojanized"]
LIB_D[lib-D]

%% Vendor/update pipeline
VENDOR[Vendor Build Server]
UPDATE["signed_update.zip"]

%% Dependency edges (normal vs. compromised)
WEB --> LIB_A
WEB --> LIB_B
WEB --> MAL
WEB --> LIB_D
VENDOR --> UPDATE
UPDATE --> WEB
UPDATE -.-> MAL

%% Legend / styles
subgraph Legend
    N["Normal dependency"]:::normal
    C["Compromised / trojanized"]:::compromised
    S["Signed update pipeline"]:::vendor
end

classDef normal fill:#e8ffe8,stroke:#90c290,color:#1f4d1f;
classDef compromised fill:#ffe8e8,stroke:#c29090,color:#4d1f1f;
classDef vendor fill:#e8f0ff,stroke:#90b0e0,color:#173d6b;
classDef webnode fill:#fff8e6,stroke:#d9b85a,color:#6b4a11;

class LIB_A,LIB_B,LIB_D normal;
class MAL compromised;
class VENDOR,UPDATE vendor;
class WEB webnode;

%% Edge colors
%% WEB -> lib-A (normal)
linkStyle 0 stroke:#22a65a,stroke-width:2px;
%% WEB -> lib-B (normal)
linkStyle 1 stroke:#22a65a,stroke-width:2px;
%% WEB -> malicious-lib (compromised)
linkStyle 2 stroke:#c23616,stroke-width:2px;
%% WEB -> lib-D (normal)
linkStyle 3 stroke:#22a65a,stroke-width:2px;
%% VENDOR -> signed_update (pipeline)
linkStyle 4 stroke:#2b6fb4,stroke-width:2px;
%% signed_update -> WEB (pipeline)
linkStyle 5 stroke:#2b6fb4,stroke-width:2px;
%% signed_update -.-> malicious-lib (trojan included)
linkStyle 6 stroke:#c23616,stroke-dasharray:4 2,stroke-width:2px;
```

The depth of modern supply chains makes this problem far harder than it once was. A single web application can depend, directly or transitively, on thousands of open-source libraries, each one a potential entry point.
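Most of that depth comes from transitive dependencies, which are the dependencies of your dependencies. The sketch below walks a small dependency graph breadth-first to show how a few direct imports fan out. The package names and graph are made up. In practice the graph would come from a lockfile or a software bill of materials (SBOM).

```python
from collections import deque

# Hypothetical dependency graph: package -> its direct dependencies.
DEPENDENCIES = {
    "web-app": ["lib-a", "lib-b", "lib-d"],
    "lib-a": ["util-x", "util-y"],
    "lib-b": ["util-y", "parser-z"],
    "lib-d": ["parser-z", "logger-q"],
    "parser-z": ["util-x"],
    "util-x": [],
    "util-y": [],
    "logger-q": [],
}


def transitive_dependencies(root: str, graph: dict) -> set:
    """Breadth-first walk returning every package reachable from `root`."""
    seen = set()
    queue = deque(graph.get(root, []))
    while queue:
        pkg = queue.popleft()
        if pkg in seen:
            continue  # shared dependencies are only counted once
        seen.add(pkg)
        queue.extend(graph.get(pkg, []))
    return seen


deps = transitive_dependencies("web-app", DEPENDENCIES)
print(len(DEPENDENCIES["web-app"]), "direct,", len(deps), "total")  # 3 direct, 7 total
```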

In 2020, the SolarWinds Orion compromise delivered malicious code through a signed vendor update, giving attackers access to U.S. government networks. Defenders faced the same problem as with LOtL: an update signed with a valid certificate looks no different from a legitimate patch.
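A signature cannot separate a trojanized update from a clean one, so defenders often fall back on behavior. One useful question is whether a signed component is talking to destinations it has never contacted before. The sketch below assumes each outbound connection has already been resolved to a process image and destination domain. The process name, domains, and baseline are hypothetical.

```python
from fnmatch import fnmatchcase

# Hypothetical baseline: the domains a vendor's update agent normally contacts.
EXPECTED_EGRESS = {
    "vendor-updater.exe": ["vendor.example", "*.vendor.example"],
}


def unexpected_connections(events, expected):
    """Return (process, domain) pairs that fall outside a process's egress baseline.

    `events` is an iterable of (process_image, destination_domain) tuples.
    """
    alerts = []
    for process, domain in events:
        patterns = expected.get(process.lower())
        if patterns is None:
            continue  # no baseline for this process; other detections cover it
        if not any(fnmatchcase(domain.lower(), p) for p in patterns):
            alerts.append((process, domain))
    return alerts


observed = [
    ("vendor-updater.exe", "updates.vendor.example"),
    ("vendor-updater.exe", "telemetry.unknown-cdn.example"),
    ("chrome.exe", "news.example"),
]
print(unexpected_connections(observed, EXPECTED_EGRESS))
# [('vendor-updater.exe', 'telemetry.unknown-cdn.example')]
```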

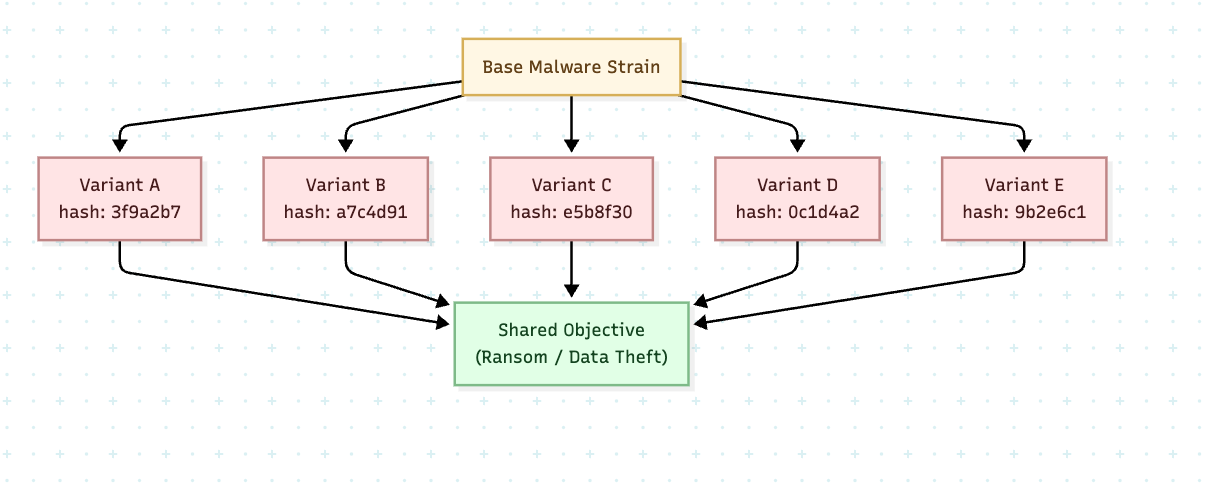

While living-off-the-land and supply chain attacks exploit existing trust relationships, polymorphic malware eliminates predictability. It does so by altering its code between infections so that no two samples look the same. The tactic predates modern AI: campaigns like the Storm Worm in 2007 already used self-modifying code to evade email filters. Machine learning has since amplified its reach.

```mermaid
flowchart TD

BASE[Base Malware Strain]

BASE --> V1["Variant A<br/>hash: 3f9a2b7"]
BASE --> V2["Variant B<br/>hash: a7c4d91"]
BASE --> V3["Variant C<br/>hash: e5b8f30"]
BASE --> V4["Variant D<br/>hash: 0c1d4a2"]
BASE --> V5["Variant E<br/>hash: 9b2e6c1"]

%% Shared objective
V1 --> GOAL["Shared Objective<br/>(Ransom / Data Theft)"]
V2 --> GOAL
V3 --> GOAL
V4 --> GOAL
V5 --> GOAL

%% Styles
classDef base fill:#fff8e6,stroke:#d9b85a,color:#6b4a11;
classDef variant fill:#ffe8e8,stroke:#c29090,color:#4d1f1f;
classDef goal fill:#e8ffe8,stroke:#90c290,color:#1f4d1f;

class BASE base;
class V1,V2,V3,V4,V5 variant;
class GOAL goal;
```

In a typical polymorphic campaign, the malicious payload is encrypted with a new key for each copy distributed. Antivirus systems that rely on hash values are defeated because no stable signature exists across variants. With AI, attackers can generate endless variations of phishing lures or have malware adjust its behavior to the environment it encounters. Proofs of concept such as BlackMamba show how attackers can automatically produce unique malware samples at scale.
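The toy example below shows why per-copy encryption defeats hash matching. It is a simplified sketch: XOR stands in for a real cipher and the payload is placeholder bytes. Real polymorphic engines also mutate the decryptor stub itself, which this sketch does not model.

```python
import hashlib
import os

PAYLOAD = b"stand-in bytes for the same malicious logic in every copy"
KEY_LEN = 16


def xor_bytes(data: bytes, key: bytes) -> bytes:
    """Repeating-key XOR: a toy stand-in for real per-copy encryption."""
    return bytes(b ^ key[i % len(key)] for i, b in enumerate(data))


def make_variant(payload: bytes) -> bytes:
    """Encrypt the payload under a fresh random key and prepend the key.

    Prepending the key loosely models a sample that carries its own decryptor.
    """
    key = os.urandom(KEY_LEN)
    return key + xor_bytes(payload, key)


def unpack(variant: bytes) -> bytes:
    key, body = variant[:KEY_LEN], variant[KEY_LEN:]
    return xor_bytes(body, key)


variants = [make_variant(PAYLOAD) for _ in range(5)]
for v in variants:
    print(hashlib.sha256(v).hexdigest()[:16])  # five different hashes

# A hash blocklist built from one variant misses the other four,
# yet every variant unpacks to the identical payload.
assert all(unpack(v) == PAYLOAD for v in variants)
```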

As with the previous strategies, the technical challenge lies in separating harmless variance from malicious intent. A polymorphic ransomware strain, for example, might change its encryption routine with each infection, but its goal state (locking files and leaving ransom notes) stays consistent. Traditional defenses, reactive by design, cannot keep pace with these attacks, which will likely grow more sophisticated over time.
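One way to key on the goal state rather than the code is to watch what a process does to files. Encrypted output looks nearly random, so many rapid high-entropy writes from one process are suspicious regardless of which variant produced them. This is a minimal sketch. It assumes a hypothetical file-write feed that includes a content sample per write, and its thresholds are illustrative. It covers only the file-locking half of the goal state. Backup and compression tools also produce high-entropy writes, so this signal needs context filtering in practice.

```python
import math
import os
from collections import Counter, defaultdict

# Hypothetical thresholds; real values would need tuning per environment.
ENTROPY_THRESHOLD = 7.5   # bits per byte; encrypted data sits close to 8.0
WRITE_THRESHOLD = 20      # high-entropy writes by one process...
WINDOW_SECONDS = 60       # ...within this many seconds


def shannon_entropy(data: bytes) -> float:
    """Shannon entropy of a byte string in bits per byte."""
    if not data:
        return 0.0
    n = len(data)
    return -sum(c / n * math.log2(c / n) for c in Counter(data).values())


def flag_bulk_encryption(write_events):
    """Return PIDs that write many high-entropy files in a short window.

    `write_events` is an iterable of (timestamp_seconds, pid, path, sample_bytes),
    sorted by timestamp, where sample_bytes is a chunk of the written content.
    """
    recent = defaultdict(list)  # pid -> timestamps of recent high-entropy writes
    flagged = set()
    for ts, pid, _path, sample in write_events:
        if shannon_entropy(sample) < ENTROPY_THRESHOLD:
            continue
        recent[pid] = [t for t in recent[pid] if ts - t <= WINDOW_SECONDS] + [ts]
        if len(recent[pid]) >= WRITE_THRESHOLD:
            flagged.add(pid)
    return flagged


# Usage: one process writes random-looking data, another writes plain text.
events = [(t, 4242, f"C:/Users/a/doc{t}.docx", os.urandom(4096)) for t in range(25)]
events += [(t, 1001, f"C:/Users/a/notes{t}.txt", b"meeting notes " * 300) for t in range(25)]
events.sort(key=lambda e: e[0])
print(flag_bulk_encryption(events))  # {4242}
```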

The pragmatic next step, then, is to build observability into detection and response pipelines.

Observability refers to the ability to infer the internal state of a system from its outputs. The concept originates in control theory, where a system is considered observable if its hidden state can be reconstructed from external signals.
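For readers curious about the control-theory origin, the textbook case is a linear time-invariant system with state $x$, input $u$, and measured output $y$. Kalman’s rank condition says the hidden state can be reconstructed from the outputs exactly when the observability matrix $\mathcal{O}$ has full rank. The analogy to security is loose: telemetry plays the role of $y$, and an attacker’s activity is part of the hidden state.

```latex
\begin{aligned}
\dot{x}(t) &= A\,x(t) + B\,u(t), \qquad y(t) = C\,x(t) + D\,u(t), \qquad x(t) \in \mathbb{R}^{n} \\
\mathcal{O} &= \begin{bmatrix} C \\ CA \\ CA^{2} \\ \vdots \\ CA^{n-1} \end{bmatrix},
\qquad \text{the system is observable} \iff \operatorname{rank}(\mathcal{O}) = n
\end{aligned}
```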

When monitoring applications, we track outputs that let us answer questions like “What requests are failing?” or “Where is latency introduced in the service path?” Security observability turns the same telemetry already flowing through the system toward security questions, such as which process launched a script and under whose account.

The raw materials are familiar: metrics, logs, and traces.

Together, these signals let systems reason about behavior over time, across hosts, and relative to baselines. For example, a sudden surge in PowerShell script executions correlated with abnormal authentication attempts could signal a living-off-the-land attack, while a trusted update initiating network connections outside its expected domains could signal a supply chain compromise.
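The first example in that paragraph can be expressed as a simple correlation rule. In this sketch the event types, host names, baselines, and thresholds are all hypothetical. A real pipeline would derive baselines from historical data and use sliding windows rather than a fixed hour.

```python
from collections import Counter

# Hypothetical per-host baselines and thresholds, for illustration only.
BASELINE_PS_PER_HOUR = {"ws-014": 2, "srv-admin-01": 40}
DEFAULT_BASELINE = 1
SURGE_FACTOR = 5
FAILED_LOGON_THRESHOLD = 10


def correlate_hour(events):
    """Flag hosts where a PowerShell surge and a failed-logon spike coincide.

    `events` holds (host, event_type) tuples from the same one-hour window.
    """
    counts = Counter(events)
    hosts = {host for host, _ in events}
    alerts = []
    for host in sorted(hosts):
        ps_runs = counts[(host, "powershell_exec")]
        failures = counts[(host, "logon_failed")]
        baseline = BASELINE_PS_PER_HOUR.get(host, DEFAULT_BASELINE)
        # Either signal alone is common; together they are worth a look.
        if ps_runs >= baseline * SURGE_FACTOR and failures >= FAILED_LOGON_THRESHOLD:
            alerts.append(host)
    return alerts


window = (
    [("ws-014", "powershell_exec")] * 15 + [("ws-014", "logon_failed")] * 12
    + [("srv-admin-01", "powershell_exec")] * 60 + [("srv-admin-01", "logon_failed")] * 12
    + [("ws-020", "powershell_exec")] * 15
)
print(correlate_hour(window))  # ['ws-014']
```

The admin server runs far more PowerShell in absolute terms, but it stays under its own baseline. The workstation that suddenly does both things is the one flagged.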

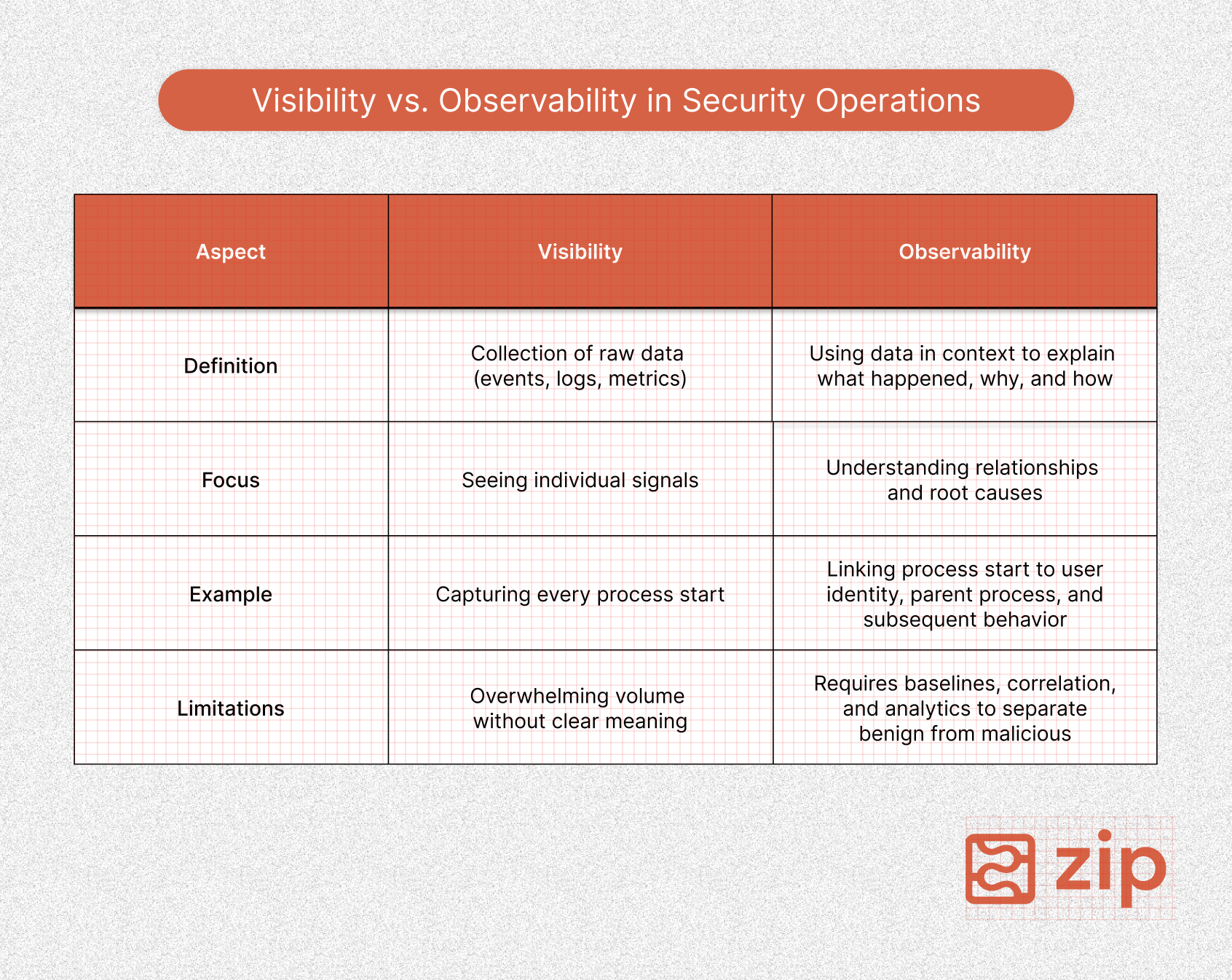

It is important to distinguish visibility from observability. Visibility is the collection of raw data (you can see the events), while observability lets you use the raw data in context (you can explain what happened and why).

A system may have visibility into every process start, but without linkage between events, defenders cannot infer intent. Observability couples raw data with context so that deviations from normal patterns stand out. Machine learning extends that principle by correlating signals across metrics, logs, and traces and surfacing anomalies that align with known attack patterns (e.g., lateral movement attempts linked with unusual network traffic).
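Linkage can be as simple as stitching process-start events together through their parent IDs. This sketch keys on PIDs for readability. Windows reuses PIDs, so real pipelines typically key on a unique identifier such as Sysmon’s ProcessGuid and ParentProcessGuid fields. The `ProcessStart` type is hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ProcessStart:
    pid: int
    ppid: int
    image: str


def ancestry(events, pid):
    """Follow parent links to rebuild the chain that led to `pid`, root first."""
    by_pid = {e.pid: e for e in events}
    chain, seen = [], set()
    while pid in by_pid and pid not in seen:  # `seen` guards against PID-reuse loops
        seen.add(pid)
        proc = by_pid[pid]
        chain.append(proc.image)
        pid = proc.ppid
    return list(reversed(chain))


events = [
    ProcessStart(100, 4, "explorer.exe"),
    ProcessStart(200, 100, "outlook.exe"),
    ProcessStart(300, 200, "winword.exe"),
    ProcessStart(400, 300, "powershell.exe"),
]
print(" -> ".join(ancestry(events, 400)))
# explorer.exe -> outlook.exe -> winword.exe -> powershell.exe
```

Seen in isolation, a PowerShell start is unremarkable. Seen as the tail of a chain that began with an email client opening a document, it tells a different story.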

Signature-based detection is reactive: it can only catch threats that have already been cataloged. Anomaly-based detection instead monitors activity for deviations from a baseline, so it can catch novel or modified threats. And because metrics, logs, and traces are linked, defenders can reconstruct the path of an attack to understand its scope and impact.
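Anomaly detection can be illustrated with the simplest possible model: a mean and standard deviation learned from a baseline period, plus a z-score cutoff. Real systems account for seasonality (time of day, day of week) and usually use more robust statistics. The traffic figures and the 3.0 threshold here are illustrative assumptions.

```python
import statistics


def zscore_anomalies(baseline, observed, threshold=3.0):
    """Return (index, value) pairs in `observed` that sit more than `threshold`
    standard deviations away from the baseline mean."""
    mean = statistics.mean(baseline)
    stdev = statistics.stdev(baseline)  # needs at least two baseline points
    if stdev == 0:
        return [(i, v) for i, v in enumerate(observed) if v != mean]
    return [(i, v) for i, v in enumerate(observed) if abs(v - mean) / stdev > threshold]


# Hypothetical hourly outbound traffic (MB) for one host.
baseline_mb = [110, 95, 102, 98, 105, 100, 97, 103]
print(zscore_anomalies(baseline_mb, [99, 104, 480]))  # [(2, 480)]
```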

How can we operationalize all of this?

Turning observability from theory into practice requires two steps.

Without the first, anomaly detection collapses under false positives. Without the second, insights remain academic.

Every environment has its own definition of “normal.” On endpoints, this means familiar parent-child process chains, like explorer.exe launching outlook.exe, as opposed to unusual ones, such as winword.exe spawning powershell.exe. On networks, it means predictable flows, such as web servers talking to databases, rather than workstations opening unusual connections to domain controllers. Even CPU, memory, and disk I/O follow repeatable patterns.
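A first-pass endpoint baseline can be as simple as counting which parent-child pairs appear during a period assumed to be clean, then flagging pairs that were never or rarely seen. The function names and the `min_seen` cutoff are hypothetical. The main weakness of this approach is that the “clean” period may itself contain attacker activity.

```python
from collections import Counter


def learn_baseline(history):
    """Count (parent, child) image pairs observed during a known-good period."""
    return Counter((parent.lower(), child.lower()) for parent, child in history)


def unusual_chains(baseline, new_events, min_seen=1):
    """Return new (parent, child) pairs seen fewer than `min_seen` times in the baseline."""
    return [
        (parent, child)
        for parent, child in new_events
        if baseline[(parent.lower(), child.lower())] < min_seen
    ]


history = [("explorer.exe", "outlook.exe")] * 50 + [("explorer.exe", "winword.exe")] * 20
baseline = learn_baseline(history)
print(unusual_chains(baseline, [("explorer.exe", "OUTLOOK.EXE"), ("winword.exe", "powershell.exe")]))
# [('winword.exe', 'powershell.exe')]
```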

Capturing these norms requires telemetry. Windows process creation events, Sysmon process and network logs, PowerShell script block logging, and authentication events show where and how users and processes interact with these systems, which makes them the natural foundation for a baseline.
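These sources arrive with different schemas, so a common first step is normalizing them into a handful of categories. The event IDs below are the standard ones for these sources:

- Security 4688 for process creation
- Sysmon 1 and 3 for process creation and network connections
- PowerShell 4104 for script blocks
- Security 4624 and 4625 for successful and failed logons

Several of them, such as 4688 and 4104, are only produced once the corresponding audit or logging policy is enabled. The dictionary shape of a parsed event and the category names are assumptions for this sketch.

```python
from collections import Counter
from typing import Optional

# (event log channel, event ID) -> normalized category for baselining.
EVENT_CATEGORIES = {
    ("Security", 4688): "process_start",
    ("Microsoft-Windows-Sysmon/Operational", 1): "process_start",
    ("Microsoft-Windows-Sysmon/Operational", 3): "network_connection",
    ("Microsoft-Windows-PowerShell/Operational", 4104): "script_block",
    ("Security", 4624): "logon_success",
    ("Security", 4625): "logon_failure",
}


def categorize(event: dict) -> Optional[str]:
    """Map a parsed event (with 'channel' and 'event_id' keys) to a category."""
    return EVENT_CATEGORIES.get((event.get("channel"), event.get("event_id")))


def category_counts(events):
    """Count events per (host, category), skipping anything unmapped."""
    counts = Counter()
    for event in events:
        category = categorize(event)
        if category is not None:
            counts[(event.get("host"), category)] += 1
    return counts


sample = [
    {"host": "ws-014", "channel": "Security", "event_id": 4625},
    {"host": "ws-014", "channel": "Security", "event_id": 4625},
    {"host": "ws-014", "channel": "Microsoft-Windows-Sysmon/Operational", "event_id": 1},
    {"host": "ws-014", "channel": "System", "event_id": 7036},
]
print(category_counts(sample))
```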

Baselines alone are not enough; they must be filtered through context. Administrators may legitimately use PsExec or WMI where office staff should not, and nightly update jobs may trigger spikes that would be suspicious at other times. Risk-based scoring, which weighs each event by who triggered it, where, and when, helps surface the incidents that matter.
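A minimal sketch of risk-based scoring follows. It assumes hypothetical user groups, tool weights, and a maintenance window. Production systems typically fold in many more context signals, such as asset criticality and user history.

```python
from dataclasses import dataclass
from datetime import time

# Hypothetical context and weights, for illustration only.
ADMIN_USERS = {"it-admin", "svc-patching"}
MAINTENANCE_WINDOW = (time(1, 0), time(4, 0))  # nightly update jobs run here
TOOL_WEIGHTS = {"psexec.exe": 40, "wmic.exe": 30, "powershell.exe": 20}


@dataclass
class Execution:
    user: str
    image: str
    at: time


def risk_score(ex: Execution) -> int:
    """Weight a tool execution by who ran it and when."""
    score = TOOL_WEIGHTS.get(ex.image.lower(), 0)
    if ex.user.lower() not in ADMIN_USERS:
        score *= 2  # remote-admin tooling is unusual outside the IT team
    start, end = MAINTENANCE_WINDOW
    if start <= ex.at <= end:
        score //= 2  # activity is expected during scheduled maintenance
    return score


print(risk_score(Execution("it-admin", "PsExec.exe", time(2, 30))))  # 20
print(risk_score(Execution("jdoe", "PsExec.exe", time(14, 5))))      # 80
```

The same binary scores four times higher when an office user runs it mid-afternoon than when an administrator runs it during the maintenance window. That difference is what lets an analyst triage the second case first.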

Different attack categories leave different footprints. Observability makes them detectable by monitoring the right signals.

Detecting LOtL attacks requires attention to signals that reveal abuse rather than normal administration:

- PowerShell launched with flags such as -EncodedCommand or -ExecutionPolicy Bypass

With supply chain attacks, systems must watch for behavior that falls outside the expected path:

Polymorphic malware mutates constantly, but certain runtime behaviors remain stable across variants:

Attacks unfold as chains rather than isolated steps: initial access, execution, persistence, lateral movement, and, finally, the attacker’s objective. Observability provides the connective tissue across these phases by linking endpoint events with authentication logs and network traces.

What might look like a legitimate process becomes suspicious when correlated with an unusual logon and an outbound data transfer. The same linked timeline also lets defenders measure improvement: Mean Time to Detect (MTTD), Mean Time to Acknowledge (MTTA), and Mean Time to Recover (MTTR) become practical metrics for evaluating whether observability reduces dwell time and speeds containment.
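Definitions of these metrics vary between organizations. This sketch makes the following assumptions:

- MTTD runs from the start of malicious activity to detection.
- MTTA runs from detection to an analyst acknowledging the alert.
- MTTR runs from detection to recovery.

The incident records are invented for illustration.

```python
from datetime import datetime
from statistics import mean

# Hypothetical incident timelines (ISO 8601 timestamps).
incidents = [
    {"start": "2025-03-01T08:00", "detected": "2025-03-01T20:00",
     "acknowledged": "2025-03-01T20:30", "recovered": "2025-03-02T08:00"},
    {"start": "2025-03-10T09:00", "detected": "2025-03-10T13:00",
     "acknowledged": "2025-03-10T13:10", "recovered": "2025-03-10T19:00"},
]


def hours_between(a: str, b: str) -> float:
    """Elapsed hours from timestamp a to timestamp b."""
    return (datetime.fromisoformat(b) - datetime.fromisoformat(a)).total_seconds() / 3600


def mean_times(records):
    """Average each interval across incidents, in hours."""
    return {
        "MTTD": mean(hours_between(r["start"], r["detected"]) for r in records),
        "MTTA": mean(hours_between(r["detected"], r["acknowledged"]) for r in records),
        "MTTR": mean(hours_between(r["detected"], r["recovered"]) for r in records),
    }


print({k: round(v, 2) for k, v in mean_times(incidents).items()})
# {'MTTD': 8.0, 'MTTA': 0.33, 'MTTR': 9.0}
```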

Building an observability-driven defense is not simply about collecting more data. The hard part lies in structuring telemetry into pipelines that balance coverage with cost, analysis with noise reduction, and automation with human judgment. Raw visibility without structure overwhelms teams. Structured observability, on the other hand, makes attacks legible and responses actionable.

For teams evaluating how to operationalize this shift, the next steps are pragmatic:

Zip Security consolidates device management, endpoint security, identity, and compliance into a single, opinionated platform. By integrating with providers like CrowdStrike, Jamf, Intune, and Okta, it brings telemetry and controls under one interface, making it easier for teams to correlate events, which ultimately simplifies both detection and response.

Regardless of which solution you use, the larger point remains vendor-agnostic. Without observability, defenders are blind to the attacks that matter most. With it, they can transform overwhelming streams of raw data into actionable narratives that contain damage.